February 13, 2016 @ 11:34

Machine learning with Ruby, Tic Tac Toe

I said in a previous post I was going to learn Ruby. I was also interested in machine learning.

So, I did both with this Tic Tac Toe project.

It's a Ruby project, and the code is currently a bit of a mess, but it's on GitHub if you want to check it out.

Machine learning seems to me like a great way to program a computer to play the game.

I certainly didn't want to master the game myself and then program the computer with my own strategy.

What I ended up building is a type of machine learning called reinforcement learning.

I had a chat with computer scientist Flora Salim, who was able to tell me more about the nature of my program.

Dr Salim said what I made was machine learning, sort of, but more specifically it was Reinforcement Learning (RL).

"RL is a sub area of AI, which gets agents to learn and re-learn from the environment and previous conditions."

RL was not strictly machine learning, she said via email.

"Mainly it is considered as a branch of AI, given the mixture of other AI techniques required in RL."

Program structure

The program's a combination of functional and object-oriented programming.

First, there are three classes: PlayerDumb for the dumb bots (see the next section), PlayerHuman for you, and one for your AI opponent.

The rest of the program is functions and loops for iterating through as many games as possible.

Spinning up some AI

To generate the AI, I run a few million games between two dumb bots.

These dumb bots have no memory, and they just randomly place markers.

The point is to find the best next move for every board state.

To do this, the program spins up two new objects from the PlayerDumb class.

One is X and the other is O. Each has an instance variable called @results, where its results are saved.
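Here is a minimal sketch of how a dumb bot and a single random game could look. It is not the code from the repository: it assumes the board is a 9-character string with '-' for blanks (as in the JSON file below), squares indexed 0 to 8 left to right and top to bottom, X moving first, and @results holding the [board, square] pairs from the current game. The names `WIN_LINES`, `winner` and `play_game` are hypothetical.

```ruby
# Every row, column and diagonal of a board indexed 0-8.
WIN_LINES = [
  [0, 1, 2], [3, 4, 5], [6, 7, 8], # rows
  [0, 3, 6], [1, 4, 7], [2, 5, 8], # columns
  [0, 4, 8], [2, 4, 6]             # diagonals
].freeze

# Returns "X" or "O" if that marker fills a whole line, otherwise nil.
def winner(board)
  WIN_LINES.each do |a, b, c|
    return board[a] if board[a] != '-' && board[a] == board[b] && board[b] == board[c]
  end
  nil
end

class PlayerDumb
  attr_reader :marker, :results

  def initialize(marker)
    @marker = marker
    @results = [] # [board_before_move, square] pairs from the current game
  end

  # No memory or strategy: pick any empty square at random, record it,
  # and return the updated board (the board passed in is not modified).
  def move(board)
    square = (0..8).select { |i| board[i] == '-' }.sample
    @results << [board, square]
    new_board = board.dup
    new_board[square] = @marker
    new_board
  end
end

# Plays one game (X moves first) and returns the winning bot, or nil for a draw.
def play_game(x, o)
  x.results.clear
  o.results.clear
  board = '-' * 9
  players = [x, o].cycle
  9.times do
    player = players.next
    board = player.move(board)
    return player if winner(board) == player.marker
  end
  nil
end

x_bot = PlayerDumb.new('X')
o_bot = PlayerDumb.new('O')
result = play_game(x_bot, o_bot)
puts result ? "#{result.marker} wins" : 'Draw'
```

After each game, the winner's and loser's @results are what the scoring step walks back through.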

The scores are kept in a JSON file, which I load into a Ruby hash.

So when a game is won, the program loops back through the winner's moves and records each board state and move in the hash, adding points.

For the loser, I loop through the board states and deduct points.

That way, wins are best, losses are worst, draws are in between.
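The scoring step might look something like this sketch. The point values (3 for a win, 1 for a draw, -1 for a loss) are assumptions; the post only says wins score best, losses worst and draws in between. `add_points` and `record_game` are hypothetical names, and the scores hash uses the same board => { square => points } shape as the JSON file.

```ruby
# Hypothetical point values: wins best, losses worst, draws in between.
WIN_POINTS = 3
DRAW_POINTS = 1
LOSS_POINTS = -1

# moves is a list of [board_before_move, square] pairs for one player.
# Adds points to scores[board][square], creating entries as needed.
def add_points(scores, moves, points)
  moves.each do |board, square|
    squares = (scores[board] ||= {})
    key = square.to_s # JSON object keys are strings, e.g. "4"
    squares[key] = squares.fetch(key, 0) + points
  end
  scores
end

# Credits both players after one game; winner is 'X', 'O' or nil for a draw.
def record_game(scores, x_moves, o_moves, winner)
  case winner
  when 'X'
    add_points(scores, x_moves, WIN_POINTS)
    add_points(scores, o_moves, LOSS_POINTS)
  when 'O'
    add_points(scores, o_moves, WIN_POINTS)
    add_points(scores, x_moves, LOSS_POINTS)
  else
    add_points(scores, x_moves, DRAW_POINTS)
    add_points(scores, o_moves, DRAW_POINTS)
  end
  scores
end
```

Because every move in a winning game gets credit, an opening square that shows up in many wins builds up a large total, which is what the starting-board numbers below reflect.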

Human vs computer

The results from the dumb bots' games are saved in a JSON file. The program then cleans up this hash table: for every board state, it keeps only the move with the highest score.

For example, here are the saved scores for the starting board state, where '-' refers to a blank space and each key is a square from 0 to 8:

    "---------": {
      "3": 71628,
      "5": 73628,
      "8": 123790,
      "4": 179720,
      "2": 123530,
      "7": 71603,
      "0": 123325,
      "1": 70988,
      "6": 124242
    }

After cleanup, the hash key and value become:

"---------" => 4

Square 4 is the centre square, which the AI rates as the best place to start a game.
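The cleanup can be sketched with `Hash#transform_values` and `Enumerable#max_by`: for each board state, keep the square with the most points. `best_moves` and `ai_move` are hypothetical names, and falling back to a random empty square for boards the AI has never seen is an assumption rather than something the post describes.

```ruby
require 'json'

# Keeps only the highest-scoring square for each board state, turning
# { "---------" => { "3" => 71628, "4" => 179720, ... } } into { "---------" => 4 }.
def best_moves(scores)
  scores.transform_values do |squares|
    squares.max_by { |_square, points| points }.first.to_i
  end
end

# Plays the learned move if this board was seen in training; otherwise
# (assumption) picks a random empty square.
def ai_move(best, board)
  best.fetch(board) { (0..8).select { |i| board[i] == '-' }.sample }
end

json = '{"---------": {"3": 71628, "5": 73628, "8": 123790, "4": 179720, ' \
       '"2": 123530, "7": 71603, "0": 123325, "1": 70988, "6": 124242}}'
best = best_moves(JSON.parse(json))
puts best['---------'] # prints 4
```

Storing only the best square per board keeps the lookup during a human game to a single hash access.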

The AI is not perfect but plays well enough to be entertaining.

Problems with the program

The data file grows very quickly, in terms of the number of keys and values saved.

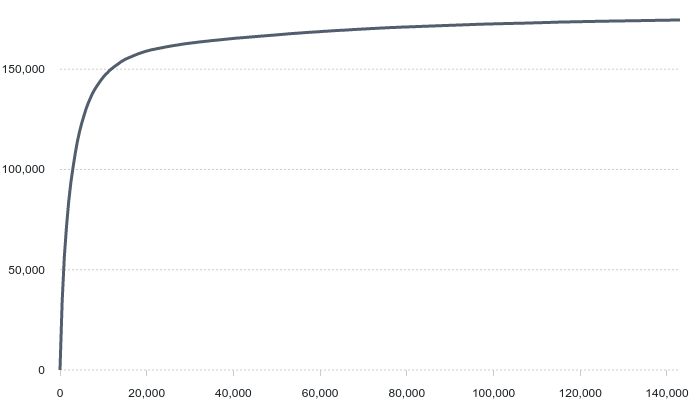

This chart shows the size of the file in bytes (y axis) as games are played, with the file saved every 500 games (x axis).

The file reached about 175 KB before the program crashed.

If fewer games are played per file save, the program takes longer.

With 500 games per save, the dumb bots took roughly 112 seconds to get through 143,000 games.

| Seconds_passed | games | file_bytes | results_file_size |
| --- | --- | --- | --- |
| 0 | 500 | 34184 | 1509 (first save) |
| 112 | 143000 | 174547 | 4400 |

It crashed here, because the program could not finish saving the current file before it started saving the next one.

As a result, the data file becomes invalid JSON while a save is in progress.

This occurs because I don't yet know how to implement callbacks in Ruby.

For now, the workaround is to play more games per save: 50,000 of them.

On my laptop, I can get up to around 3 million games before an error if I save every 50,000 games.
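One way to avoid leaving invalid JSON on disk, without callbacks, is to write each save to a temporary file and then rename it over the real one. On POSIX filesystems a rename within the same directory replaces the target atomically, so the results file always holds a complete save. This is a swapped-in technique, not what the project does, and `save_scores`, `train` and the `.tmp` suffix are hypothetical. The sketch plays games in batches through a block and logs `[seconds_passed, games, file_bytes]` after each save, like the first three columns of the table above.

```ruby
require 'json'

# Write to a temporary file, then rename it over the real one. On POSIX
# filesystems rename replaces the target atomically, so readers see either
# the previous save or the new one, never a half-written file.
def save_scores(path, scores)
  tmp = "#{path}.tmp"
  File.write(tmp, JSON.generate(scores))
  File.rename(tmp, path)
end

# Plays total_games games, yielding the scores hash to the block once per game,
# and saves after every games_per_save games (and after the last one).
# Returns rows of [seconds_passed, games, file_bytes].
def train(path, total_games, games_per_save)
  scores = File.exist?(path) ? JSON.parse(File.read(path)) : {}
  start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  log = []
  (1..total_games).each do |games|
    yield scores
    next unless (games % games_per_save).zero? || games == total_games
    save_scores(path, scores)
    seconds = (Process.clock_gettime(Process::CLOCK_MONOTONIC) - start).round
    log << [seconds, games, File.size(path)]
  end
  log
end
```

For example, `train('results.json', 3_000_000, 50_000) { |scores| ... }` would save every 50,000 games, with the block playing one game and updating `scores`.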

I believe if I figure out callbacks in Ruby, I could leave this running on my VPS for a week.

The AI would be quite good!